As expected, the 9k frames failed again.īy now, it’s confirmed without a doubt that the hardware can pass 9k frames.

#VSPHERE MTU 9000 DRIVER#

Back to ESXi 6.0 with the bnx2x driver (the qfle3 driver is not listed as supported in 6.0, and we are running vCenter 6.0, so that limits the highest version we could use on the blade).Changed the driver used in ESXi 6.5 from the Broadcom bnx2x driver to the native qfle3 driver in ESXi.Ping of 8972 bytes with DF-bit set were successful The server wasn’t in production, so time wasn’t a critical factor. Given that the FEX Host Interface (HIF) was configured via port-profile, the port-profile is the same as working for the other blades, and that 9k frames were functioning in Windows, where’s the problem?īy this time, HPE had sent replacement CNAs (FLB and Mezz card), so the hardware should be good. I’ve omitted the output here but using 2345 bytes for the ping also resulted in a failure. A few other MTU sizes were tested until one was found which worked: vmkping -I vmk1 192.168.1.10 -d -s 2344

#VSPHERE MTU 9000 MAC#

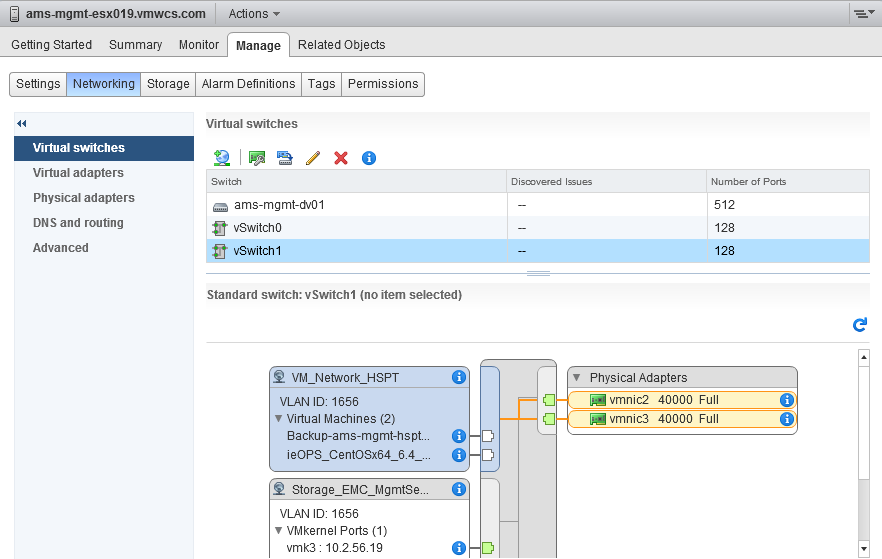

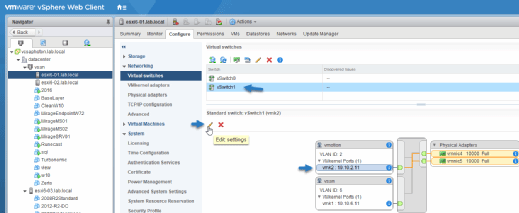

Name PCI Device Driver Admin Status Link Status Speed Duplex MAC Address MTU Description

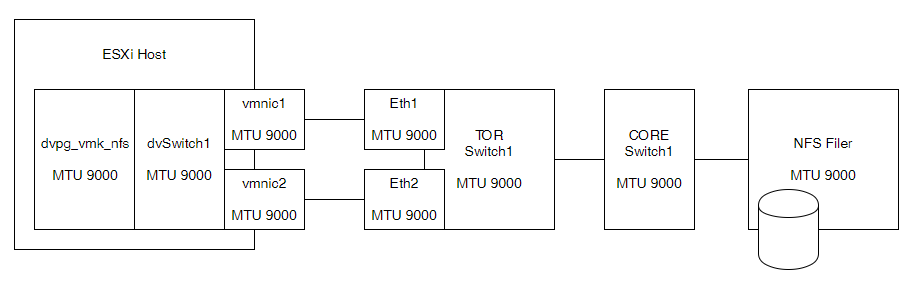

This should have changed the MTU on the uplink interface to 9000: esxcli network nic list The vmk settings were changed to increase MTU from the standard 1500 to 9000. Once ESXi was installed, the blade was added to the vDS and a VMkernel interface (vmk) created on the iSCSI network. During this test phase, 9k jumbo frames were successfully passed as verified via ping -l 8972 -f

#VSPHERE MTU 9000 WINDOWS#

The Gen9 server is dropping ICMP frames larger than 2344 bytes with the DF bit set.īefore ESXi was installed on the new blade, Windows Server 2016 was installed for Hyper-V testing. All blades except the Gen9 can successfully pass 9k frames to the NetApp. MTU end-to-end on the parent Nexus 9k and the FEX ports is set at 9216.

We run jumbo frames (9000-byte MTU) for our iSCSI SAN. My problem was with the 534M, but it’s possible that the 536FLB would have the same issue detailed here. Hardware note: the Gen9 is outfitted with onboard 536FLB (Broadcom 57840 based) and 534M Mezzanine (Broadcom 57810) Converged Network Adapters (CNAs). We recently purchased a BL460c Gen9 and have run into problems adding it into the cluster. Each blade is running VMware vSphere 6.0 Enterprise Plus, with virtual Distributed Switches (vDS). We have recently transitioned from using HP 6120xg switches for uplinks to a pair of Cisco B22HP Fabric Extenders connected to parent Nexus 93180YC-EX switches. I admin a handful of blades in an HP BladeSystem.
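The byte counts used in these tests come from header arithmetic: an ICMP echo sent with the DF bit set must fit in a single IPv4 packet, so the largest payload is the MTU minus the 20-byte IPv4 header and the 8-byte ICMP header. That is why 8972 is the test size for a 9000-byte MTU, and why a 2344-byte payload passing while 2345 fails suggests the path was only carrying IP packets up to 2372 bytes. The sketch below assumes IPv4 without IP options; the helper names (`icmp_payload_for_mtu`, `ip_packet_size`, `vmkping_args`, `windows_ping_args`) are hypothetical, and the Windows target address simply reuses the vmkping address for illustration.

```python
from typing import List

# Header sizes assumed for a plain IPv4 ICMP echo (no IP options).
IPV4_HEADER_BYTES = 20
ICMP_HEADER_BYTES = 8


def icmp_payload_for_mtu(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet of `mtu` bytes."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def ip_packet_size(payload: int) -> int:
    """Total IPv4 packet size produced by an ICMP echo carrying `payload` data bytes."""
    return payload + IPV4_HEADER_BYTES + ICMP_HEADER_BYTES


def vmkping_args(vmk: str, target: str, payload: int) -> List[str]:
    """ESXi test: -I picks the VMkernel interface, -d sets Don't Fragment, -s sets the payload size."""
    return ["vmkping", "-I", vmk, target, "-d", "-s", str(payload)]


def windows_ping_args(target: str, payload: int) -> List[str]:
    """Windows test: -l sets the payload size, -f sets Don't Fragment."""
    return ["ping", "-l", str(payload), "-f", target]


if __name__ == "__main__":
    print(icmp_payload_for_mtu(9000))  # 8972
    print(ip_packet_size(2344))        # 2372
    print(" ".join(vmkping_args("vmk1", "192.168.1.10", icmp_payload_for_mtu(9000))))
    # vmkping -I vmk1 192.168.1.10 -d -s 8972
```

The builders only assemble argument lists and run nothing, so the joined strings can be pasted into an ESXi shell or a Windows command prompt as-is.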

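The 2344/2345 boundary was found by trying sizes by hand. A bisection over payload sizes finds the same kind of boundary systematically, in at most 14 probes for the 1472 to 8972 range. This is a minimal sketch, assuming a `probe` callable that returns True when an unfragmented ping of that payload size gets a reply, and assuming success is monotonic (if a size passes, every smaller size passes). `find_max_df_payload` and `simulated_probe` are hypothetical helpers; the simulated probe only mimics the Gen9’s observed behavior instead of actually running `vmkping`.

```python
from typing import Callable


def find_max_df_payload(probe: Callable[[int], bool], low: int, high: int) -> int:
    """Return the largest payload in [low, high] for which probe() succeeds.

    Assumes probe(low) succeeds and that success is monotonic in payload size.
    """
    if not probe(low):
        raise ValueError("probe fails even at the lower bound")
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if probe(mid):
            low = mid        # mid passed: the boundary is at mid or above
        else:
            high = mid - 1   # mid failed: the boundary is below mid
    return low


def simulated_probe(limit: int) -> Callable[[int], bool]:
    """Hypothetical stand-in for `vmkping -d -s <size>`: passes only payloads <= limit."""
    return lambda payload: payload <= limit


if __name__ == "__main__":
    # Search between the standard-MTU payload (1472) and the jumbo payload (8972).
    print(find_max_df_payload(simulated_probe(2344), 1472, 8972))  # 2344
```

In a real run, `probe` would wrap one ping per size and check whether any reply came back; the bisection itself does not care which tool sends the packets.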